Is my data used by AIs?

When using an AI assistant, whether for decision-making, writing, or everyday tasks, we all ask ourselves the same question: what happens to the data I share?

We all want to gain productivity from these tools without risking exposure of our sensitive data.

Why does this question come up again and again?

Using an AI assistant in meetings is convenient... but it also means entrusting your strategic discussions to third-party technology. Whether it's to organize teamwork, automate tasks, or analyze information, AI tools are everywhere. And naturally, this presence raises a legitimate question: What really happens to the data we share?

In 2024, Meta announced that it planned to use content shared by European users to train its AI models unless they explicitly objected. The decision quickly sparked a heated controversy, led in particular by the non-profit NOYB, which saw it as a possible violation of the GDPR. (Source: Le Monde)

In 2024, the social network X (formerly Twitter) enabled, by default, a setting allowing xAI, Elon Musk's AI company, to use users' posts to train its Grok model. Many users only found out after the fact, without any clear prior notice. (Source: Le Monde)

These examples show how widespread data collection is, even among the largest organizations. It's a reminder that we should always be careful about how our information is used.

The concrete risks when data is used by AI

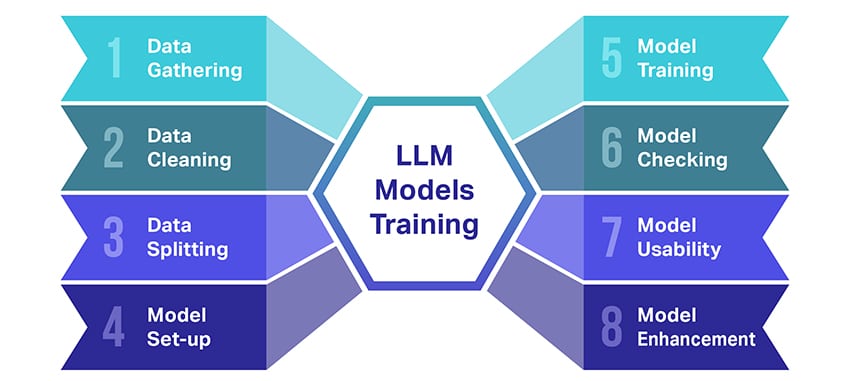

Data processing by AI is not an abstract issue: it involves real, often sensitive information that artificial intelligence models can use. LLMs (large language models), such as ChatGPT (OpenAI) or Mistral AI's models, are trained on billions of pieces of data to improve their understanding and performance. To innovate and stay competitive, these companies must keep training their models continuously.

The problem is that thousands of users feed data into these systems every day, sometimes through tools that are not fully secure or whose data storage they do not control. Without clear guarantees, it can be hard to know whether the information shared remains confidential or is used to train the models more broadly.

Against these risks, and as business uses of AI multiply, Europe relies on a strict legal framework to protect personal data and regulate how it is processed: the GDPR. This regulation sets precise rules for the collection, storage, and use of data, in order to protect users' privacy and ensure that sensitive information is not exposed.

GDPR and AI: the regulation that should reassure you

The GDPR (General Data Protection Regulation, known as the RGPD in French) plays a fundamental role in regulating the use of personal data.

According to the CNIL (France's data protection authority), every business must follow a few key principles when using your data:

- Lawfulness: your data must be used within a legal framework.

- Transparency: you need to know what information is being collected and why.

- Data minimization: only the information that is really needed should be used.

- Accuracy: the data must be correct and updated when necessary.

- Storage limitation: your data should not be kept longer than necessary.

- Security: data must be protected against leaks and unauthorized use.

For example, suppose your business uses an AI assistant to automate repetitive tasks. If the tool retains personal information about your employees, for instance alongside records of strategic decisions, the GDPR requires that you be informed of this storage and that you be able to request that the information be deleted or anonymized.

Within these rules, businesses can take advantage of AI while protecting their teams' privacy and their strategic information.

Why do businesses use (or want to use) your data?

Behind every advance in artificial intelligence lies... our data. Every interaction and every piece of information shared helps business AI tools understand better, learn, and improve.

In concrete terms, businesses use this data for two main purposes:

- Improving the performance of their tools: the more information a system receives (conversations, documents, team interactions), the more accurate and efficient it becomes. This is the principle of machine learning: the tool learns from what it sees in order to respond better afterwards.

- Personalizing the experience: a well-designed AI assistant can adapt to a team's habits, surface the key points, highlight certain priorities, or adjust how it works based on usage.

The problem arises when this information is reused to train global models that combine data from multiple companies or users, without clear disclosure or strict anonymization. In that case, confidential data can circulate without your knowledge.

Confidentiality in business: the strategic challenge

When it comes to sensitive information (strategy, finances, HR), confidentiality becomes imperative. Your meetings cannot be treated as just another anonymous data stream.

With remote work, employees share more and more through video calls. AI assistants built into meeting platforms capture audio, spoken content, and contextual data. If the tool is not designed to meet security and confidentiality requirements, the risk grows accordingly.

Towards sovereign and respectful solutions

Faced with these challenges, several initiatives are emerging to reconcile security with AI performance.

Sovereign solutions, whether European or French, ensure that data remains subject to local law. France, for example, encourages such architectures to protect privacy in AI use across both the public and private sectors.

Mistral AI, a European start-up, offers “open” AI models, also called “open-source” models. Because companies can host these models themselves, they keep control of their information instead of sending it to external servers, which lets them use AI while protecting their data.

At Delos, the goal is to offer an efficient AI assistant built on several models (ChatGPT, Mistral, Claude...) while protecting your data. Your data is stored in France, encrypted, and processed in line with GDPR principles; thanks to encryption, this provides both strong security and full opacity with respect to the language models.

Conclusion: yes, your data can be used, BUT you have rights

So, is your data used by AI? Potentially, yes, if you use an AI assistant or a transcription tool without taking precautions. But in the European Union, the GDPR imposes strong obligations regarding transparency, consent, erasure, and data minimization.

According to a study by the National Cybersecurity Alliance (NCA) published in September 2024, nearly 40% of employees say they have shared sensitive information with AI tools without their employer's knowledge. These figures highlight the importance of building security and privacy into enterprise AI tools from the start, and of giving users real control over their data.

How do you choose the right AI assistant for you?

- Performance: the tool must be effective and reliable, capable of providing accurate and relevant results.

- Transparency and control: you need to know what the AI does with your data, where it is stored, and how to delete it if necessary.

- Sovereignty and compliance: choose a tool that stores data in France or Europe, complies with the GDPR, and does not send your information to countries without equivalent protection.

If you want to try a solution that combines performance, security, and collaboration, you can test the Delos platform for free via this link: Try Delos for free.

